# Image editing using instruct-pix2pix

In this notebook we will modify a given image using text instructions. These instructions allow us to change image characteristics while preserving the image content. This can be useful for producing multiple similar example images and for studying whether algorithms, e.g. for segmentation, are capable of processing these image variations. We will use the instruct-pix2pix model (`timbrooks/instruct-pix2pix` on the Hugging Face Hub).

```python
# import the Image submodule explicitly; a bare `import PIL` does not guarantee that PIL.Image is loaded
import PIL.Image
import torch
from skimage.io import imread
from skimage.transform import resize
import numpy as np
import stackview
import matplotlib.pyplot as plt
from diffusers import StableDiffusionInstructPix2PixPipeline, EulerAncestralDiscreteScheduler
```

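```python
# load the pretrained model with half-precision (float16) weights and without the safety checker
pipe = StableDiffusionInstructPix2PixPipeline.from_pretrained(
    "timbrooks/instruct-pix2pix",
    torch_dtype=torch.float16,
    safety_checker=None,
)
# move the model to the GPU
pipe.to("cuda")
# replace the default scheduler with the Euler ancestral scheduler
pipe.scheduler = EulerAncestralDiscreteScheduler.from_config(pipe.scheduler.config)
```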

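```python
image_np = imread("../../data/blobs.tif")

# the model prefers images of specific sizes; here we use 256 x 256 pixels.
# preserve_range=True keeps the original intensities, so casting back to the original dtype is safe
scaled_image_np = resize(image_np, (256, 256), preserve_range=True).astype(image_np.dtype)
```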
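Resizing to 256 x 256 pixels stretches images that are not square. If the aspect ratio matters, one option is to scale the longer side to the target size and round both sides down to a multiple of 8, since Stable Diffusion-based pipelines in diffusers expect image sizes divisible by 8 (the autoencoder downsamples by that factor). The helper `size_multiple_of` is a hypothetical sketch under that assumption, not part of any library.

```python
def size_multiple_of(height, width, target=256, multiple=8):
    """Hypothetical helper: scale (height, width) so that the longer side is
    about `target` pixels, then round both sides down to a multiple of `multiple`."""
    scale = target / max(height, width)
    # never return a side shorter than `multiple`
    new_height = max(multiple, int(height * scale) // multiple * multiple)
    new_width = max(multiple, int(width * scale) // multiple * multiple)
    return new_height, new_width


print(size_multiple_of(512, 300))  # (256, 144)
```

For a 2D image, `resize(image_np, size_multiple_of(*image_np.shape), preserve_range=True)` could then take the place of the fixed `(256, 256)`.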

We need to convert our data into a Pillow image, a common input format. As the model works on color (RGB) images, we repeat the grayscale image in all three channels.

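```python
# repeat the grayscale image three times and move the channel axis last: (rows, columns, 3)
image_rgb_np = np.asarray([scaled_image_np, scaled_image_np, scaled_image_np]).swapaxes(0, 2).swapaxes(0, 1)

image = PIL.Image.fromarray(image_rgb_np)
image
```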
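The `swapaxes` construction is correct but not easy to read. A sketch of an equivalent, arguably clearer alternative is `np.stack(..., axis=-1)`, which places the three identical channels along a new last axis. The small array `gray` is made-up stand-in data for `scaled_image_np`.

```python
import numpy as np

# hypothetical stand-in for scaled_image_np
gray = np.arange(12, dtype=np.uint8).reshape(3, 4)

# stack three identical channels along a new last axis -> shape (rows, columns, 3)
rgb = np.stack([gray, gray, gray], axis=-1)

# the swapaxes-based construction gives exactly the same array
rgb_swapped = np.asarray([gray, gray, gray]).swapaxes(0, 2).swapaxes(0, 1)
print(rgb.shape, np.array_equal(rgb, rgb_swapped))  # (3, 4, 3) True
```

`PIL.Image.fromarray` interprets a `uint8` array of shape (rows, columns, 3) as an RGB image, so either variant can be passed to it.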

We can then edit the image using a prompt.
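The call sets two guidance parameters. As described in the InstructPix2Pix paper and, to our understanding, implemented in diffusers, the model predicts the noise under three conditions per step: unconditioned, conditioned on the input image only, and conditioned on both image and text. `image_guidance_scale` weights the pull toward the input image and `guidance_scale` the pull toward the text instruction. The NumPy sketch reproduces only this weighting; the random arrays are hypothetical stand-ins for the model's predictions. With both scales set to 1 the terms cancel and the result equals the fully conditioned prediction, i.e. no extra guidance is applied.

```python
import numpy as np

def combine_guidance(eps_uncond, eps_image, eps_text, guidance_scale, image_guidance_scale):
    """Classifier-free guidance as described for InstructPix2Pix.

    eps_uncond: prediction without image and text conditioning
    eps_image:  prediction conditioned on the input image only
    eps_text:   prediction conditioned on the input image and the text prompt
    """
    return (eps_uncond
            + image_guidance_scale * (eps_image - eps_uncond)
            + guidance_scale * (eps_text - eps_image))

# random arrays standing in for the three noise predictions (hypothetical data)
rng = np.random.default_rng(0)
eps_uncond, eps_image, eps_text = rng.normal(size=(3, 4, 8, 8))

# with both scales at 1, the guided prediction is just the fully conditioned one
guided = combine_guidance(eps_uncond, eps_image, eps_text, 1.0, 1.0)
print(np.allclose(guided, eps_text))  # True
```

Larger scales extrapolate beyond the conditioned predictions, pushing the result further toward the input image or the instruction, respectively.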

```python
result_image = pipe(prompt="blur the image",
                    image=image,
                    num_inference_steps=5,
                    guidance_scale=1,
                    image_guidance_scale=1
                   ).images[0]
```

```python
stackview.curtain(np.array(result_image), scaled_image_np)
```

```python
stackview.insight(np.array(result_image))
```


Note that the model was not trained specifically on scientific images. Thus, it may not know how to edit images when prompts contain scientific image processing terms such as "median filter". It is, however, capable of modifying images following common terms such as "blur".

```python
result_image = pipe(prompt="apply a median filter to the image",
                    image=image,
                    num_inference_steps=5,
                    guidance_scale=1,
                    image_guidance_scale=1
                   ).images[0]
```

```python
stackview.curtain(np.array(result_image), scaled_image_np)
```

```python
stackview.insight(np.array(result_image))
```


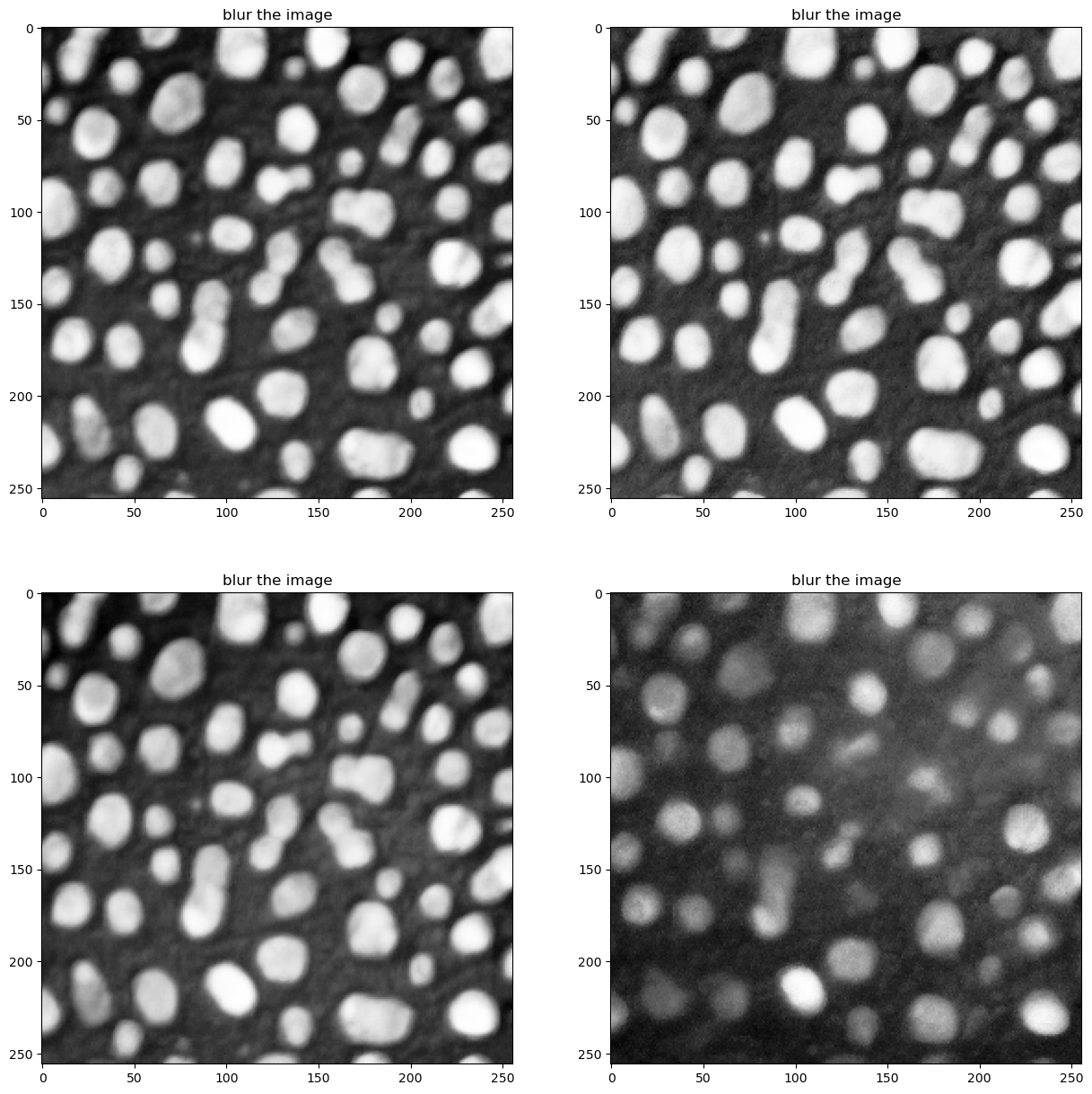

## Reproducibility

Some prompts lead to more reproducible results than others. We can visualize this by running the pipeline with the same prompt multiple times.

```python
def display_panel(image, prompts):
    """Edit `image` once per prompt and show up to four results in a 2 x 2 grid."""
    fig, axes = plt.subplots(2, 2, figsize=(15, 15))
    for i, prompt in enumerate(prompts):
        result_image = pipe(prompt=prompt,
                            image=image,
                            num_inference_steps=5,
                            guidance_scale=1,
                            image_guidance_scale=1
                           ).images[0]
        # result i goes to row i // 2, column i % 2; show the first channel in grayscale
        ax = axes[i // 2, i % 2]
        ax.imshow(np.array(result_image)[:, :, 0], cmap="Greys_r")
        ax.set_title(prompt)
    plt.show()
```

```python
display_panel(image, ["blur the image"] * 4)
```
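The variation stems from random noise: the latents start from random noise, and the Euler ancestral scheduler chosen when loading the pipeline injects fresh noise at every denoising step. diffusers pipelines accept a `generator` argument, e.g. `generator=torch.Generator("cuda").manual_seed(42)`, which fixes this randomness, so repeated calls with the same prompt should return the same image on the same hardware and library versions. The NumPy snippet is only a toy illustration of that principle; `toy_ancestral_sampling` is a hypothetical stand-in, not the diffusers scheduler.

```python
import numpy as np

def toy_ancestral_sampling(x0, steps, seed):
    """Hypothetical toy loop, NOT the diffusers scheduler: each step shrinks
    the signal and adds fresh Gaussian noise, as ancestral samplers do."""
    rng = np.random.default_rng(seed)  # all randomness comes from this seeded generator
    x = x0.astype(float)
    for _ in range(steps):
        x = 0.9 * x + 0.1 * rng.normal(size=x.shape)
    return x

x0 = np.ones((4, 4))
a = toy_ancestral_sampling(x0, steps=5, seed=42)
b = toy_ancestral_sampling(x0, steps=5, seed=42)
c = toy_ancestral_sampling(x0, steps=5, seed=7)
print(np.array_equal(a, b))  # True: same seed, identical result
print(np.array_equal(a, c))  # False: different seed, different result
```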

## Exercise

Generate more images using the example prompts below. How reproducible are the results?
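Besides visual inspection, one way to put a number on reproducibility is to compare repeated results pixel by pixel. The function `mean_pairwise_difference` is a hypothetical sketch of such a score: the mean absolute pixel difference, averaged over all pairs of results for the same prompt. Lower values suggest more reproducible results; pixel differences do not capture perceptual similarity, so the score complements rather than replaces looking at the images.

```python
import itertools
import numpy as np

def mean_pairwise_difference(images):
    """Hypothetical reproducibility score: mean absolute pixel difference,
    averaged over all pairs of images. 0 means all images are identical."""
    arrays = [np.asarray(im, dtype=float) for im in images]
    if len(arrays) < 2:
        raise ValueError("need at least two images to compare")
    diffs = [np.mean(np.abs(a - b)) for a, b in itertools.combinations(arrays, 2)]
    return float(np.mean(diffs))

# Usage with the pipeline from this notebook (requires a GPU):
# for p in prompts:
#     results = [np.array(pipe(prompt=p, image=image, num_inference_steps=5,
#                              guidance_scale=1, image_guidance_scale=1).images[0])
#                for _ in range(4)]
#     print(p, mean_pairwise_difference(results))
```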

```python
prompts = ["apply a median filter to the image",
           "deconvolve the image",
           "blur the image",
           "despeckle the image"]
```