Merging objects using machine learning

The ObjectMerger is a Random Forest Classifier in the apoc library that can learn which labels to merge and which to keep separate. It allows post-processing label images after objects have been (intentionally or not) oversegmented.

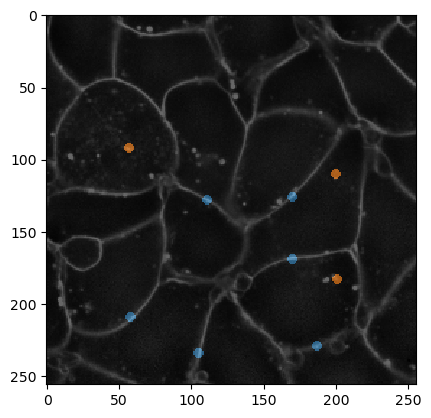

A common example can be derived from an image showing intensities in cell membranes.

| shape | dtype | size | min | max |
| --- | --- | --- | --- | --- |
| (256, 256) | float32 | 256.0 kB | 277.0 | 44092.0 |

Because the membrane intensity varies between regions of the image, we need to correct for this first.

| shape | dtype | size | min | max |
| --- | --- | --- | --- | --- |
| (256, 256) | float32 | 256.0 kB | 0.15839748 | 11.448771 |
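One common way to even out such regional intensity differences is to divide the image by a strongly blurred copy of itself. The following is a minimal NumPy-only sketch of that idea. Whether the correction above was done exactly this way is an assumption, and the function names `gaussian_blur` and `divide_by_background` as well as the synthetic test image are hypothetical.

```python
import numpy as np

def gaussian_blur(image, sigma):
    """Separable Gaussian blur implemented with NumPy only."""
    radius = int(4 * sigma + 0.5)
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (x / sigma) ** 2)
    kernel /= kernel.sum()
    # Reflect the borders so that the image edges are not darkened.
    padded = np.pad(np.asarray(image, dtype=np.float64), radius, mode="reflect")
    # Blur along rows first, then along columns.
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def divide_by_background(image, sigma):
    """Divide an image by its blurred copy (assumes strictly positive intensities)."""
    return np.asarray(image, dtype=np.float64) / gaussian_blur(image, sigma)

# Synthetic example: a grid of "membranes" under uneven illumination.
yy, xx = np.mgrid[0:64, 0:64]
membranes = np.where((xx % 8 == 0) | (yy % 8 == 0), 2.0, 1.0)
illumination = 1.0 + 9.0 * xx / 63.0  # brightness increases from left to right
uneven = membranes * illumination
corrected = divide_by_background(uneven, sigma=4)

# Ratio of mean brightness, left half vs. right half.
# A value closer to 1 indicates more even brightness.
print("before:", uneven[:, :32].mean() / uneven[:, 32:].mean())
print("after: ", corrected[:, :32].mean() / corrected[:, 32:].mean())
```

The blur radius (`sigma`) should be clearly larger than the membrane thickness, otherwise the membranes themselves are treated as background and flattened.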

For technical reasons it is also recommended to convert the intensity image into an image of integer type, which may require normalizing it first. It is important that images used for training and images used for prediction have intensities in the same range.

| shape | dtype | size | min | max |
| --- | --- | --- | --- | --- |
| (256, 256) | uint32 | 256.0 kB | 1.0 | 54.0 |
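The requirement that training and prediction images share the same intensity range can be met by rescaling with a fixed reference value instead of each image's own maximum. The sketch below illustrates this. The helper `to_integer_range` and its parameters `reference_max` and `target_max` are hypothetical names, and the scaling used for the image above may differ.

```python
import numpy as np

def to_integer_range(image, reference_max, target_max=255):
    """Rescale intensities with a fixed reference and convert to uint32.

    Using the same reference_max for every image keeps training and
    prediction data on the same intensity scale.
    """
    scaled = np.asarray(image, dtype=np.float64) / reference_max * target_max
    # Round to the nearest integer and clip values outside the target range.
    return np.clip(np.rint(scaled), 0, target_max).astype(np.uint32)

# The same reference value is applied to both images.
training_image = np.array([[0.0, 5.0], [10.0, 2.5]])
prediction_image = np.array([[1.0, 20.0], [7.5, 10.0]])

training_int = to_integer_range(training_image, reference_max=10, target_max=100)
prediction_int = to_integer_range(prediction_image, reference_max=10, target_max=100)
print(training_int.dtype, training_int.tolist())
print(prediction_int.dtype, prediction_int.tolist())
```

Rescaling each image by its own minimum and maximum would be simpler, but then identical membrane intensities could map to different integer values in different images.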

An annotation tells the algorithm which segmented objects should be merged and which should not.

| shape | dtype | size | min | max |
| --- | --- | --- | --- | --- |
| (256, 256) | uint32 | 256.0 kB | 0.0 | 2.0 |

For visualization purposes, we can overlay the annotation with the membrane image.

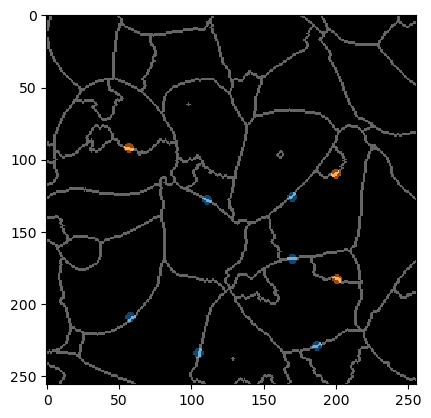

To show more closely what needs to be annotated, we also overlay the label-edge image and the annotation. Note that the annotations on edges that should not be merged are small dots, each carefully placed so that it covers only the two objects that should stay separate.

Training the merger

The ObjectMerger can be trained using the following features:

touch_portion: The relative amount by which an object touches another. For example, in a symmetric, honeycomb-like tissue, neighboring cells have a touch_portion of 1/6 to each other.

touch_count: The number of pixels where objects touch. When using this parameter, make sure that images used for training and prediction have the same voxel size.

mean_touch_intensity: The mean intensity between touching objects. If a cell is over-segmented, multiple objects are found within that cell. The area where these objects touch has a lower intensity than the area where two cells touch, so the two cases can be differentiated. Normalizing the image as shown above is key.

centroid_distance: The distance (in pixels or voxels) between centroids of labeled objects.

Note: most of these features are recommended for use with isotropic images only.
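To make the feature definitions above more concrete, the following sketch computes them on a tiny label image with NumPy. It is a simplified illustration and not apoc's implementation: it assumes 4-connected pixel neighbors, ignores the background label 0, and normalizes touch_portion by the contacts with other labels only. The function names `touch_statistics` and `centroid_distance` are hypothetical.

```python
from collections import defaultdict
import numpy as np

def touch_statistics(labels, image):
    """Per pair of touching labels: touch_count, mean_touch_intensity, touch_portion."""
    labels = np.asarray(labels)
    image = np.asarray(image, dtype=np.float64)
    count = defaultdict(int)
    intensity_sum = defaultdict(float)
    # Compare every pixel with its right and its lower neighbor.
    pairs = (
        (labels[:, :-1], labels[:, 1:], image[:, :-1], image[:, 1:]),
        (labels[:-1, :], labels[1:, :], image[:-1, :], image[1:, :]),
    )
    for a, b, ia, ib in pairs:
        touching = (a != b) & (a > 0) & (b > 0)
        for la, lb, va, vb in zip(a[touching], b[touching], ia[touching], ib[touching]):
            key = (int(min(la, lb)), int(max(la, lb)))
            count[key] += 1
            intensity_sum[key] += (va + vb) / 2
    # Total number of contacts per label, used to normalize touch_portion.
    total = defaultdict(int)
    for (la, lb), n in count.items():
        total[la] += n
        total[lb] += n
    return {
        (la, lb): {
            "touch_count": n,
            "mean_touch_intensity": intensity_sum[(la, lb)] / n,
            # Portion seen from the first label and from the second label.
            "touch_portion": (n / total[la], n / total[lb]),
        }
        for (la, lb), n in count.items()
    }

def centroid_distance(labels, a, b):
    """Euclidean distance in pixels between the centroids of labels a and b."""
    labels = np.asarray(labels)
    ca = np.argwhere(labels == a).mean(axis=0)
    cb = np.argwhere(labels == b).mean(axis=0)
    return float(np.linalg.norm(ca - cb))

# Three objects side by side: 1|2 is an over-segmentation border (dark),
# 2|3 is a real membrane (bright).
labels = np.repeat(np.array([[1, 1, 2, 2, 3, 3]]), 4, axis=0)
image = np.ones(labels.shape)
image[:, 3:5] = 9.0

stats = touch_statistics(labels, image)
for pair, values in stats.items():
    print(pair, values)
print("centroid distance 1-2:", centroid_distance(labels, 1, 2))
```

In this toy example both borders have the same touch_count, so only mean_touch_intensity separates the over-segmentation border from the real membrane, which is why normalized intensities matter for that feature.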

| shape | dtype | size | min | max |
| --- | --- | --- | --- | --- |
| (256, 256) | uint32 | 256.0 kB | 1.0 | 31.0 |