Scaling

Image scaling is a useful preprocessing step, ideally applied after noise and background removal. It reduces image size to a degree at which the scientific question can still be answered while staying within memory constraints. For example, if the computer reports out-of-memory errors, scaling the image to a smaller size is usually the first thing to try. Furthermore, many segmentation algorithms and quantitative measurement methods require pixel/voxel isotropy as a precondition: voxels must have the same size in all directions, otherwise the results of such algorithms might be misleading or even wrong.

import pyclesperanto_prototype as cle

import matplotlib.pyplot as plt

from skimage.io import imread

To demonstrate scaling, we use cropped and resampled image data from the Broad Bio Image Challenge: Ljosa V, Sokolnicki KL, Carpenter AE (2012). Annotated high-throughput microscopy image sets for validation. Nature Methods 9(7):637. PMID: 22743765. PMCID: PMC3627348. Available at http://dx.doi.org/10.1038/nmeth.2083

input_image = imread("../../data/BMP4blastocystC3-cropped_resampled_8bit.tif")

# voxel size is not equal in all directions;

# the voxels are anisotropic.

voxel_size_x = 0.202

voxel_size_y = 0.202

voxel_size_z = 1

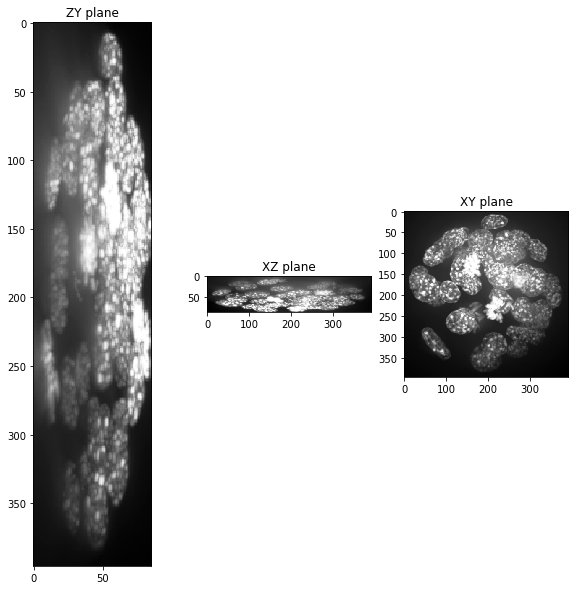

When visualizing projections of that dataset along the three axes, you see that the voxels are not isotropic.

def show(image_to_show, labels=False):
    """
    This function generates three projections: in X-, Y- and Z-direction and shows them.
    """
    # maximum intensity projections along each of the three axes
    projection_x = cle.maximum_x_projection(image_to_show)
    projection_y = cle.maximum_y_projection(image_to_show)
    projection_z = cle.maximum_z_projection(image_to_show)

    # show the three projections side by side
    fig, axs = plt.subplots(1, 3, figsize=(10, 10))
    cle.imshow(projection_x, plot=axs[0], labels=labels)
    cle.imshow(projection_y, plot=axs[1], labels=labels)
    cle.imshow(projection_z, plot=axs[2], labels=labels)

    axs[0].set_title("ZY plane")
    axs[1].set_title("XZ plane")
    axs[2].set_title("XY plane")
    plt.show()

show(input_image)
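The anisotropy visible in the projections can also be expressed as a single number. The following is a minimal sketch in plain Python; the names `voxel_size_zyx`, `anisotropy` and `is_isotropic` are illustrative and not part of the notebook or of pyclesperanto.

```python
import math

# Voxel sizes from above, written in (Z, Y, X) order to match the array shape.
voxel_size_zyx = (1.0, 0.202, 0.202)

# Ratio of the largest to the smallest voxel edge length.
# A value of 1 would mean the voxels are isotropic.
anisotropy = max(voxel_size_zyx) / min(voxel_size_zyx)
is_isotropic = math.isclose(anisotropy, 1.0)

print(f"anisotropy: {anisotropy:.2f}, isotropic: {is_isotropic}")
```

For this dataset the ratio is roughly 5, meaning each voxel is about five times longer in Z than in X and Y.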

Scaling with the voxel size

The easiest way to fix this problem is to scale the dataset by its voxel size, i.e. to use the voxel size along each axis as the scale factor for that axis. By definition, this results in a dataset where the voxels are isotropic and have voxel_size = 1 (microns in our case) in all directions.

scale_factor_x = voxel_size_x

scale_factor_y = voxel_size_y

scale_factor_z = voxel_size_z

resampled = cle.scale(input_image,
                      factor_x=scale_factor_x,
                      factor_y=scale_factor_y,
                      factor_z=scale_factor_z,
                      linear_interpolation=True,
                      auto_size=True)

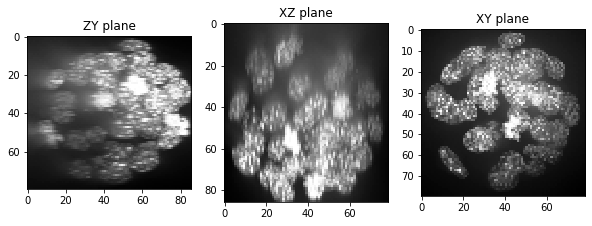

show(resampled)

The resampled stack now has fewer voxels in X and Y, which might be a problem when it comes to segmenting the objects accurately. We can see this clearly by printing the shape of the original data and of the resampled image. The shape is given in depth-height-width (Z-Y-X) order.

input_image.shape

(86, 396, 393)

resampled.shape

(86, 80, 79)
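The resampled shape can be anticipated from the input shape and the scale factors. The sketch below multiplies each axis length by its factor and rounds to the nearest integer. The rounding rule is an assumption chosen because it reproduces the shapes printed above; it is not taken from the pyclesperanto documentation, and `predict_scaled_shape` is a hypothetical helper.

```python
# Shape of the input stack in (Z, Y, X) order, as printed above.
input_shape_zyx = (86, 396, 393)

# Scale factors in the same (Z, Y, X) order; here they equal the voxel sizes.
scale_factors_zyx = (1, 0.202, 0.202)


def predict_scaled_shape(shape, factors):
    """Estimate the output shape: axis length times factor, rounded.

    Rounding to nearest is an assumption, not documented library behaviour.
    """
    return tuple(round(n * f) for n, f in zip(shape, factors))


predicted_shape = predict_scaled_shape(input_shape_zyx, scale_factors_zyx)
print(predicted_shape)  # (86, 80, 79)
```

Z keeps all 86 slices because its factor is 1, while X and Y shrink to roughly a fifth of their original length.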

A potential solution is to introduce a zoom_factor. It allows tuning how large the resampled image will be:

zoom_factor = 2

scale_factor_x = voxel_size_x * zoom_factor

scale_factor_y = voxel_size_y * zoom_factor

scale_factor_z = voxel_size_z * zoom_factor

resampled_zoomed = cle.scale(input_image,
                             factor_x=scale_factor_x,
                             factor_y=scale_factor_y,
                             factor_z=scale_factor_z,
                             linear_interpolation=True,
                             auto_size=True)

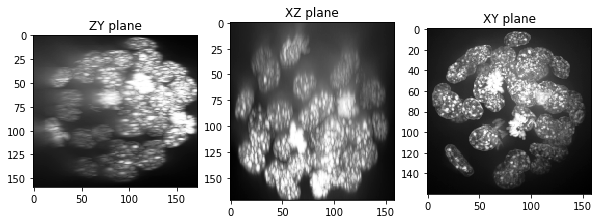

show(resampled_zoomed)

resampled_zoomed.shape

(172, 160, 159)
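The zoom factor does not have to be an integer. One possible heuristic, which is not from the original text, is to choose it so that the finest-sampled axes are left at their original resolution and only the coarse axis is upsampled. The sketch below uses plain Python with illustrative variable names.

```python
import math

# Voxel sizes from above in (Z, Y, X) order.
voxel_size_zyx = (1.0, 0.202, 0.202)

# Heuristic (an assumption, not the notebook's method): pick the zoom factor
# so that the smallest scale factor becomes 1, i.e. no axis is downsampled.
zoom_factor = 1 / min(voxel_size_zyx)

# Per-axis scale factors, computed the same way as in the cell above.
scale_factors_zyx = tuple(v * zoom_factor for v in voxel_size_zyx)

# Sanity check: X and Y are now left (almost exactly) unscaled.
assert math.isclose(scale_factors_zyx[1], 1.0)

print(f"zoom_factor: {zoom_factor:.3f}")
print("scale factors (Z, Y, X):", [round(f, 3) for f in scale_factors_zyx])
```

With these factors the resulting isotropic voxel size equals the original X/Y voxel size, at the cost of a stack that is about five times larger in Z than the input.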

When zooming/scaling 3D images, keep memory limitations in mind. When an image is displayed in the notebook, its size is shown in the box on the right of the view. Zooming a 3D stack by a factor of 2, as in the example above, increases its size by a factor of 8 (2x2x2).

resampled

[Output: cle image view with the image size shown in the box on the right]

resampled_zoomed

[Output: cle image view with the image size shown in the box on the right]
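The cubic growth of memory with the zoom factor can be estimated before any image is allocated. The following sketch assumes 4 bytes per voxel (32-bit floating point), which is an assumption about the image type rather than something stated above; `estimate_megabytes` is a hypothetical helper, and its rounded shapes may differ by a voxel from what `cle.scale` actually produces.

```python
import math


def estimate_megabytes(shape, zoom_factor=1, bytes_per_voxel=4):
    """Rough size in MB (10**6 bytes) of a stack after zooming every axis.

    bytes_per_voxel=4 assumes 32-bit float voxels (an assumption).
    """
    zoomed_shape = [round(n * zoom_factor) for n in shape]
    return math.prod(zoomed_shape) * bytes_per_voxel / 1e6


# Shape of `resampled` in (Z, Y, X) order, as printed earlier.
base_shape = (86, 80, 79)

for zoom in (1, 2, 4, 8):
    print(f"zoom {zoom}: ~{estimate_megabytes(base_shape, zoom):.1f} MB")
```

Each doubling of the zoom factor multiplies the estimate by 8, so comparing these numbers with the memory available on the graphics card gives a rough idea of how far the zoom factor can be pushed.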

Exercise

Increase the zoom factor and rerun the code above. At which zoom factor does the program crash? How large would the resulting image have been if the program had not crashed? How much memory does your graphics card have?