Image Normalization

Normalization is a common preprocessing step for biological images and is also widely used in deep learning-based approaches. During normalization, the range of pixel intensity values is changed. This is crucial when pixel intensities differ between images, because normalization makes the images comparable and enables quantification of biological features across different images.

However, while normalization can improve consistency and make patterns easier to detect, it can also distort the data if it is not applied carefully, i.e. suppress or exaggerate signals. Finding the balance between reducing unwanted variation and preserving biological variability is crucial for successful image analysis.
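Normalizing each image on its own makes the risk of suppressing signals easy to see. The minimal sketch below uses two small synthetic arrays. They are hypothetical stand-ins for a dim sample and a sample three times as bright, not data from this notebook. It also uses a helper `min_max` written only for this example. After per-image min-max normalization, both arrays become identical, so the brightness difference is lost.

```python
import numpy as np

def min_max(x):
    """Rescale an array linearly to [0, 1] using its own minimum and maximum."""
    x = x.astype(np.float64)
    return (x - x.min()) / (x.max() - x.min())

# Hypothetical intensities of a dim sample and a three times brighter sample
dim_sample = np.array([10, 20, 30], dtype=np.uint8)
bright_sample = np.array([30, 60, 90], dtype=np.uint8)

print(min_max(dim_sample))     # [0.  0.5 1. ]
print(min_max(bright_sample))  # [0.  0.5 1. ]
```

Absolute intensity differences between samples may carry biological meaning. In that case, one option is to normalize all images with shared parameters, such as a single minimum and maximum computed over the whole dataset, instead of per-image values.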

This notebook gives an overview of different normalization techniques. At the end, we investigate possible impacts and risks of normalization that need to be considered.

Overview

| Normalization Technique | Formula | Description | Advantages | Disadvantages |
|---|---|---|---|---|
| Min-Max Normalization | $X' = \frac{X - X_{min}}{X_{max} - X_{min}}$ | Rescales data linearly to a fixed range, typically [0, 1]. | Simple to implement; preserves the relative distances between intensity values. | Sensitive to outliers, which can compress the rest of the intensity range. |
| Percentile-Based Normalization | $X' = \frac{X - P_{low}}{P_{high} - P_{low}}$ | Uses a low and a high percentile of the intensity distribution (e.g., the 1st and 99th) instead of the absolute minimum and maximum. | Reduces the influence of outliers. | Requires careful choice of percentiles; values outside the percentile range fall outside [0, 1] unless clipped. |
| Z-Score Normalization | $Z = \frac{X - \mu}{\sigma}$ | Standardizes data by subtracting the mean $\mu$ and dividing by the standard deviation $\sigma$, giving zero mean and unit variance. | Useful for approximately normally distributed data; gives all images a common center and spread. | Mean and standard deviation are themselves sensitive to outliers; the output range is unbounded; less meaningful for strongly non-Gaussian data. |

Techniques

```python
from skimage.io import imread
import numpy as np
import matplotlib.pyplot as plt
from stackview import insight
```

To demonstrate how normalization works, we use blobs.tif as an example image.

```python
image = imread('../../data/blobs.tif')
```

Now we can use stackview.insight to display the image together with its essential properties:

```python
insight(image)
```


We can see that our image has the data type unsigned 8-bit integer, or uint8 for short. This means:

- unsigned: the values are never negative
- 8-bit: there are 2^8 = 256 different intensity levels, ranging from 0 to 255
- integer: the values are whole numbers
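You can also check these properties directly with NumPy. The minimal sketch below queries the uint8 value range with `np.iinfo`. It then inspects a small synthetic array, `example`, which is a hypothetical stand-in for `image`. With the real data, the same attributes can be called on `image`.

```python
import numpy as np

# Value range of the uint8 data type
info = np.iinfo(np.uint8)
print(info.min, info.max)  # 0 255
print(2 ** info.bits)      # 256 intensity levels

# Hypothetical stand-in for the loaded image
example = np.array([[8, 16], [248, 255]], dtype=np.uint8)
print(example.dtype, example.min(), example.max())  # uint8 8 255
```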

The intensity histogram shows how these intensity levels are distributed. Let's try out different ways to normalize this image:

Min-Max Normalization

One of the most common ways to normalize data is min-max normalization. Let us try it out:

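```python
# Subtract the minimum and divide by the intensity range; the division yields float64
min_max = (image - image.min()) / (image.max() - image.min())
insight(min_max)
```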


Min-max normalization maps the minimum intensity value of the image to 0 and the maximum intensity value to 1. All other values lie between 0 and 1.

Therefore, we need to represent fractional values instead of whole numbers. Consequently, we can no longer use the uint8 data type and need a floating-point representation, here float64.

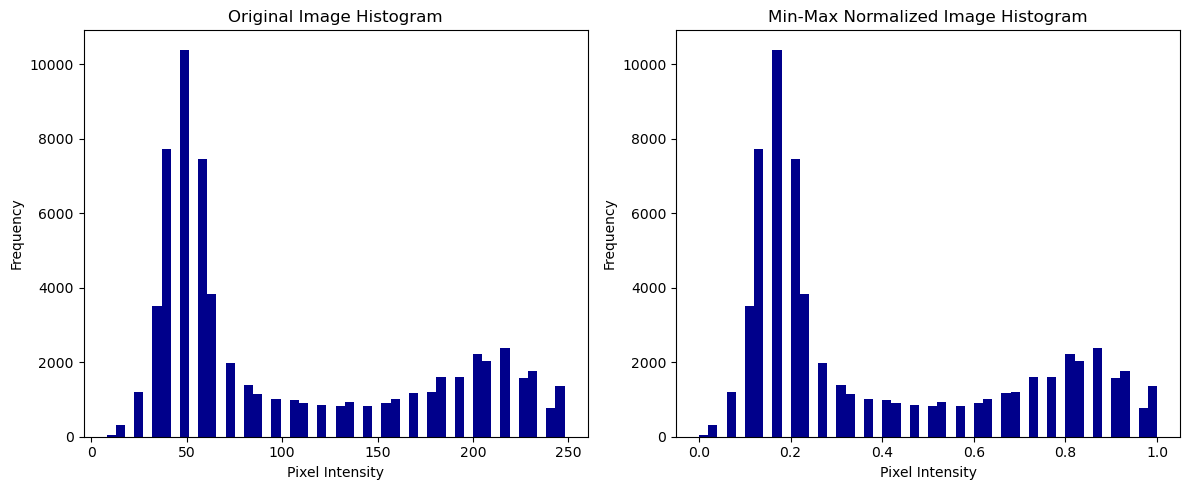
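The min-max formula can be wrapped in a small reusable function. The sketch below uses a hypothetical helper, `min_max_normalize`, written for illustration. It converts the input to float64 before computing, so the integer input never has to hold fractional results. It also handles a constant image, where max equals min and the formula would divide by zero. Returning zeros in that case is an assumption of this sketch, not a standard convention.

```python
import numpy as np

def min_max_normalize(img):
    """Linearly rescale img to [0, 1]; returns a float64 array."""
    img = img.astype(np.float64)  # work in floating point from the start
    lo, hi = img.min(), img.max()
    if hi == lo:
        # Constant image: there is no range to stretch (assumption: return zeros)
        return np.zeros_like(img)
    return (img - lo) / (hi - lo)

# Hypothetical uint8 test data
example = np.array([[8, 16], [248, 255]], dtype=np.uint8)
normalized = min_max_normalize(example)
print(normalized.dtype)                    # float64
print(normalized.min(), normalized.max())  # 0.0 1.0
```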

Let us compare the intensity histograms of our original and the min-max-normalized image:

```python
# Plot the histograms
fig, axes = plt.subplots(1, 2, figsize=(12, 5))

# Original image histogram
axes[0].hist(image.ravel(), bins=50, color='darkblue')
axes[0].set_title('Original Image Histogram')
axes[0].set_xlabel('Pixel Intensity')
axes[0].set_ylabel('Frequency')

# Min-max normalized image histogram
axes[1].hist(min_max.ravel(), bins=50, color='darkblue')
axes[1].set_title('Min-Max Normalized Image Histogram')
axes[1].set_xlabel('Pixel Intensity')
axes[1].set_ylabel('Frequency')

plt.tight_layout()
plt.show()
```

The two histograms have essentially the same shape, because min-max normalization is a linear transformation: it only rescales the intensity axis, here to the range 0 to 1. Bringing pixel values to a common range is particularly useful when different datasets need to be compared or combined, for example as input for a machine learning algorithm.

Percentile-based Normalization

Percentile-based normalization uses a low and a high percentile of the intensity distribution (here the 1st and 99th) as the bounds of the normalization range, instead of the absolute minimum and maximum. Because a few extreme pixels barely shift these percentiles, the method is more robust against outliers and skews the distribution less.

```python
# Compute percentiles
p1 = np.percentile(image, 1)
p99 = np.percentile(image, 99)

# Perform percentile normalization
percentile_image_unclipped = (image - p1) / (p99 - p1)

insight(percentile_image_unclipped)
```


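Values below the 1st percentile are now negative, and values above the 99th percentile are greater than 1. We can clip the values to the range [0, 1] for better comparability and visualize the result: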

```python
percentile_image_clipped = np.clip(percentile_image_unclipped, 0, 1)
insight(percentile_image_clipped)
```


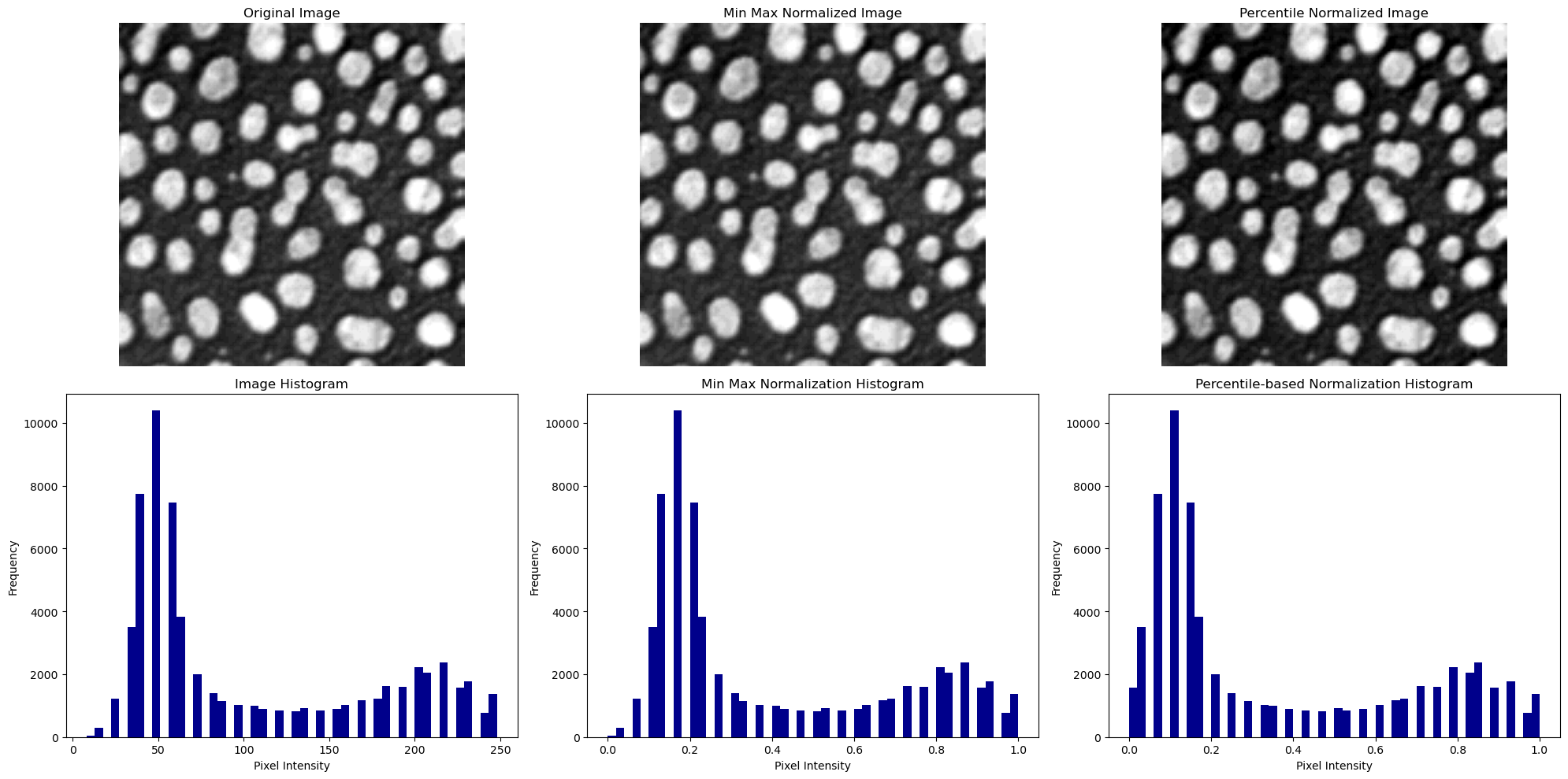
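The two steps above can be combined into one function: first rescale between two percentiles, then clip. The sketch below uses a hypothetical helper, `percentile_normalize`, written for this example rather than taken from a particular library. Its parameter names `low`, `high` and `clip` are our own. On the synthetic values 0 to 999, the 1st and 99th percentiles leave 10 values below and 10 values above the range. As a result, 2 % of the pixels end up saturated at exactly 0 or 1 after clipping.

```python
import numpy as np

def percentile_normalize(img, low=1.0, high=99.0, clip=True):
    """Map the `low` percentile of img to 0 and the `high` percentile to 1."""
    img = img.astype(np.float64)
    p_low, p_high = np.percentile(img, [low, high])
    out = (img - p_low) / (p_high - p_low)
    if clip:
        # Values below p_low become 0, values above p_high become 1
        out = np.clip(out, 0, 1)
    return out

values = np.arange(1000)  # hypothetical test data
result = percentile_normalize(values)
saturated = np.mean((result == 0) | (result == 1))
print(saturated)  # 0.02
```

Like min-max normalization, this sketch divides by zero if both percentiles are equal, for example in an almost constant image.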

```python
# Create a 2x3 subplot grid (2 rows, 3 columns)
fig, axes = plt.subplots(2, 3, figsize=(20, 10))

# Show the original image
axes[0, 0].imshow(image, cmap='gray')
axes[0, 0].set_title('Original Image')
axes[0, 0].axis('off')  # Hide axes

# Show the min-max normalized image
axes[0, 1].imshow(min_max, cmap='gray')
axes[0, 1].set_title('Min Max Normalized Image')
axes[0, 1].axis('off')  # Hide axes

# Show the percentile-based normalized image
axes[0, 2].imshow(percentile_image_clipped, cmap='gray')
axes[0, 2].set_title('Percentile Normalized Image')
axes[0, 2].axis('off')  # Hide axes

# Original image histogram
axes[1, 0].hist(image.ravel(), bins=50, color='darkblue')
axes[1, 0].set_title('Image Histogram')
axes[1, 0].set_xlabel('Pixel Intensity')
axes[1, 0].set_ylabel('Frequency')

# Min-max normalized image histogram
axes[1, 1].hist(min_max.ravel(), bins=50, color='darkblue')
axes[1, 1].set_title('Min Max Normalization Histogram')
axes[1, 1].set_xlabel('Pixel Intensity')
axes[1, 1].set_ylabel('Frequency')

# Percentile-based normalized image histogram
axes[1, 2].hist(percentile_image_clipped.ravel(), bins=50, color='darkblue')
axes[1, 2].set_title('Percentile-based Normalization Histogram')
axes[1, 2].set_xlabel('Pixel Intensity')
axes[1, 2].set_ylabel('Frequency')

# Adjust layout for better spacing
plt.tight_layout()
plt.show()
```

The original image and the min-max normalized image look almost identical, and their histograms have the same shape. The percentile-normalized image appears to have slightly higher contrast. This is because the intensities between the 1st and 99th percentiles are stretched over the full range [0, 1], while values outside this range are clipped to 0 or 1.

Exercise

Another type of normalization is Z-score normalization. It transforms the image so that it has a mean of 0 and a standard deviation of 1, based on the following formula:

$Z = \frac{X - \mu}{\sigma}$

where $\mu$ is the mean and $\sigma$ the standard deviation of the pixel intensities.

How would you implement Z-score normalization in Python?

Impact and risk of normalization

```python
nuclei = imread('../../data/BBBC007_batch/17P1_POS0013_D_1UL.tif')
insight(nuclei)
```


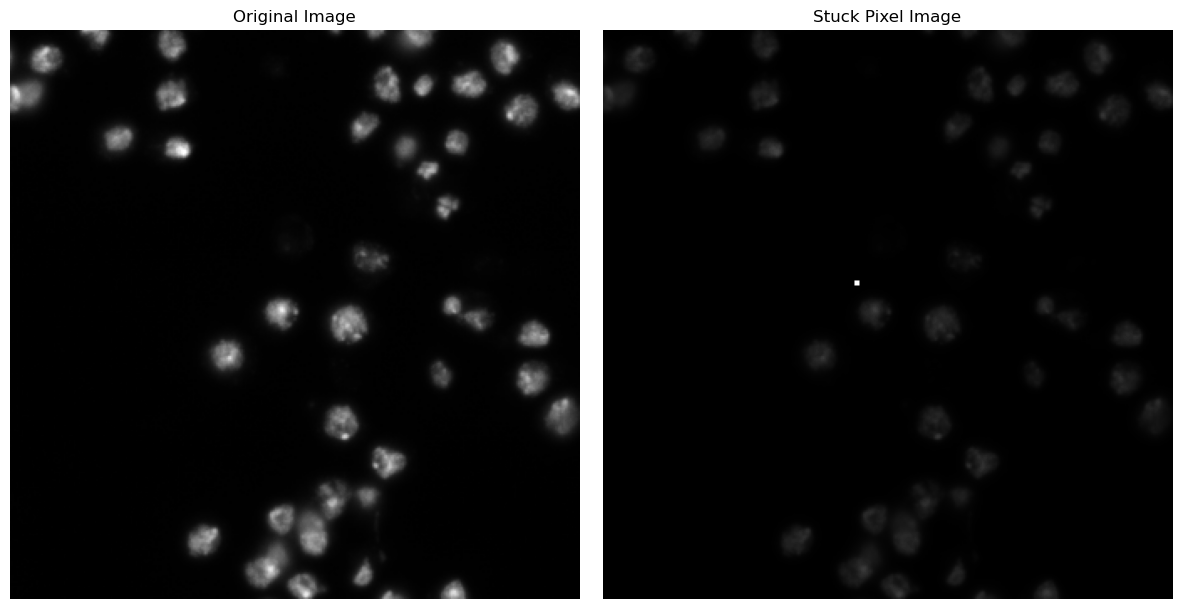

Now imagine that, during image acquisition, a pixel did not respond to light as expected. This is a common problem in microscopy. We can distinguish between “dead pixels”, which remain permanently black, and “stuck pixels”, which remain permanently in a bright state. Below, we simulate a small patch of stuck pixels by setting a 3×3 region to an intensity of 1000.

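```python
# Simulate a 3x3 patch of stuck pixels with an intensity far above the rest of the image
nuclei_stuck = nuclei.copy()
nuclei_stuck[150:153, 150:153] = 1000
insight(nuclei_stuck)
```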


The intensity distribution is now skewed, because the stuck pixels lie far above all other values.

```python
# Show nuclei and nuclei_stuck side by side
fig, axes = plt.subplots(1, 2, figsize=(12, 6))

axes[0].imshow(nuclei, cmap='gray')
axes[0].set_title('Original Image')
axes[0].axis('off')

axes[1].imshow(nuclei_stuck, cmap='gray')
axes[1].set_title('Stuck Pixel Image')
axes[1].axis('off')

plt.tight_layout()
plt.show()
```

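In the image with the stuck pixels, the rest of the image appears dark and low in contrast. By default, imshow maps the gray values from the image minimum to its maximum, and the maximum is now defined by the stuck pixels. Let us investigate how the stuck pixels affect min-max normalization.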

```python
min_max_stuck = (nuclei_stuck - nuclei_stuck.min()) / (nuclei_stuck.max() - nuclei_stuck.min())
insight(min_max_stuck)
```


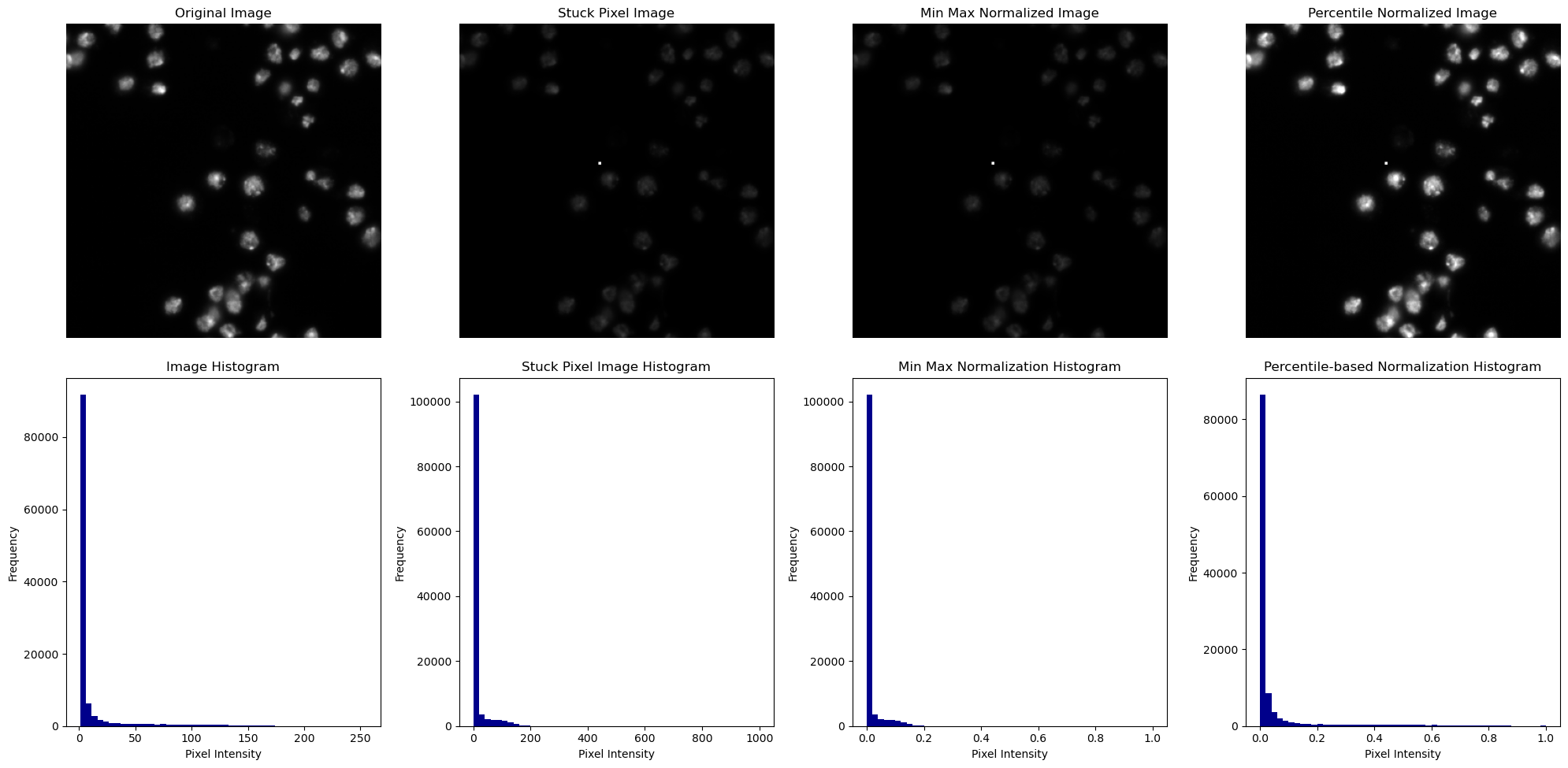

Min-max normalization is highly sensitive to this kind of outlier, because it uses the minimum and maximum intensity values to define the normalization range. But what about percentile-based normalization?

```python
# Use the 0.1 and 99.9 percentiles as the normalization bounds
p1_stuck = np.percentile(nuclei_stuck, 0.1)
p99_stuck = np.percentile(nuclei_stuck, 99.9)

percentile_stuck_unclipped = (nuclei_stuck - p1_stuck) / (p99_stuck - p1_stuck)
insight(percentile_stuck_unclipped)
```


You can also play around with the percentiles; here, I chose 0.1 and 99.9. Now, let us clip the image to the range [0, 1]:

```python
percentile_stuck_clipped = np.clip(percentile_stuck_unclipped, 0, 1)
insight(percentile_stuck_clipped)
```
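Whether an upper percentile bound excludes the stuck pixels depends on how many there are relative to the image size. The sketch below uses a hypothetical synthetic 100 × 100 image with values 0 to 199. A 3 × 3 patch is set to 1000, mirroring the 9 stuck pixels simulated above. The 99.9 percentile still lies below the outliers, because it leaves out the top 0.1 % of the pixels, about 10 of them. The 99.95 percentile, in contrast, lands on the stuck value itself.

```python
import numpy as np

# Hypothetical test image: values 0..199 repeated, 10,000 pixels in total
img = (np.arange(10_000) % 200).reshape(100, 100).astype(np.float64)
img[0:3, 0:3] = 1000  # 9 simulated stuck pixels (0.09 % of the image)

# Compare upper percentile bounds; only bounds below 1000 exclude the stuck pixels
for q in (99.0, 99.9, 99.95):
    print(q, np.percentile(img, q))
```

As a rough rule under this setup, the fraction of pixels above the upper percentile must be larger than the fraction of defective pixels for the bound to ignore them.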


```python
# Create a 2x4 subplot grid (2 rows, 4 columns)
fig, axes = plt.subplots(2, 4, figsize=(20, 10))

# Show the original image
axes[0, 0].imshow(nuclei, cmap='gray')
axes[0, 0].set_title('Original Image')
axes[0, 0].axis('off')  # Hide axes

# Show the image with a stuck pixel
axes[0, 1].imshow(nuclei_stuck, cmap='gray')
axes[0, 1].set_title('Stuck Pixel Image')
axes[0, 1].axis('off')  # Hide axes

# Show the min-max normalized image
axes[0, 2].imshow(min_max_stuck, cmap='gray')
axes[0, 2].set_title('Min Max Normalized Image')
axes[0, 2].axis('off')  # Hide axes

# Show the percentile-based normalized image
axes[0, 3].imshow(percentile_stuck_clipped, cmap='gray')
axes[0, 3].set_title('Percentile Normalized Image')
axes[0, 3].axis('off')  # Hide axes

# Original image histogram
axes[1, 0].hist(nuclei.ravel(), bins=50, color='darkblue')
axes[1, 0].set_title('Image Histogram')
axes[1, 0].set_xlabel('Pixel Intensity')
axes[1, 0].set_ylabel('Frequency')

# Stuck pixel image histogram
axes[1, 1].hist(nuclei_stuck.ravel(), bins=50, color='darkblue')
axes[1, 1].set_title('Stuck Pixel Image Histogram')
axes[1, 1].set_xlabel('Pixel Intensity')
axes[1, 1].set_ylabel('Frequency')

# Min-max normalized image histogram
axes[1, 2].hist(min_max_stuck.ravel(), bins=50, color='darkblue')
axes[1, 2].set_title('Min Max Normalization Histogram')
axes[1, 2].set_xlabel('Pixel Intensity')
axes[1, 2].set_ylabel('Frequency')

# Percentile-based normalized image histogram
axes[1, 3].hist(percentile_stuck_clipped.ravel(), bins=50, color='darkblue')
axes[1, 3].set_title('Percentile-based Normalization Histogram')
axes[1, 3].set_xlabel('Pixel Intensity')
axes[1, 3].set_ylabel('Frequency')

# Adjust layout for better spacing
plt.tight_layout()
plt.show()
```
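The minimal sketch below puts a number on the compression visible in the plots. It uses hypothetical synthetic data, not the nuclei image: 1,000 regular pixel values between 0 and 199 plus a single stuck pixel at 1000. It compares min-max normalization with percentile-based normalization using the 0.1 and 99.9 percentiles and clipping. In both cases it measures the range that the regular pixels occupy afterwards.

```python
import numpy as np

# Hypothetical 1-D "image": regular intensities 0..199 plus one stuck pixel at 1000
regular = np.arange(1000) % 200
pixels = np.append(regular, 1000).astype(np.float64)
is_regular = pixels < 1000

# Min-max normalization: the stuck pixel defines the maximum
mm = (pixels - pixels.min()) / (pixels.max() - pixels.min())

# Percentile-based normalization with clipping (0.1 and 99.9 percentiles)
p_low, p_high = np.percentile(pixels, [0.1, 99.9])
pc = np.clip((pixels - p_low) / (p_high - p_low), 0, 1)

# Range occupied by the regular (non-stuck) pixels after each method
mm_range = mm[is_regular].max() - mm[is_regular].min()
pc_range = pc[is_regular].max() - pc[is_regular].min()
print(f"min-max: {mm_range:.3f}, percentile: {pc_range:.3f}")
# min-max: 0.199, percentile: 1.000
```

With min-max normalization, the regular pixels are squeezed into about a fifth of the available range. With the percentile bounds, they keep nearly the full [0, 1] range.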

Summary

What can we learn from this? An image may contain very dark pixels or, as in our case, very bright ones (e.g. due to noise or artifacts). These extreme values stretch the normalization range, so most pixel values are squeezed into a narrow interval, which lowers the contrast in the rest of the image. One way to avoid this is percentile-based normalization. It uses low and high percentiles of the intensity distribution as the bounds of the normalization range, instead of the absolute minimum and maximum. As a result, a small number of extreme pixels has little influence on the result.